Jiacheng Zhang 张佳程

Hi! I am a third-year PhD student at the University of Michigan, Ann Arbor, advised by Professor Steve Oney.

I earned my Bachelor's degree in Computer Science summa cum laude from the University of Michigan. I also earned a second degree in Electrical and Computer Engineering from Shanghai Jiao Tong University. During my undergraduate years, I was advised by Prof. Andrew Owens on research in multimodal learning and computer vision.

My research aims to improve Human-AI interaction by identifying and addressing usability and conceptual gaps between users and the AI systems they interact with. My recent research explores AI-assisted web agents that collaborate with users to handle online tasks such as multi-target manipulation, information retrieval, and decision-making.

Multi-Click: Cross-Tab Web Automation via Action Generalization

Multi-Click enables users to simultaneously perform the same action (e.g., clicking or typing) across multiple pages while maintaining the immediacy and understandability of direct manipulation.

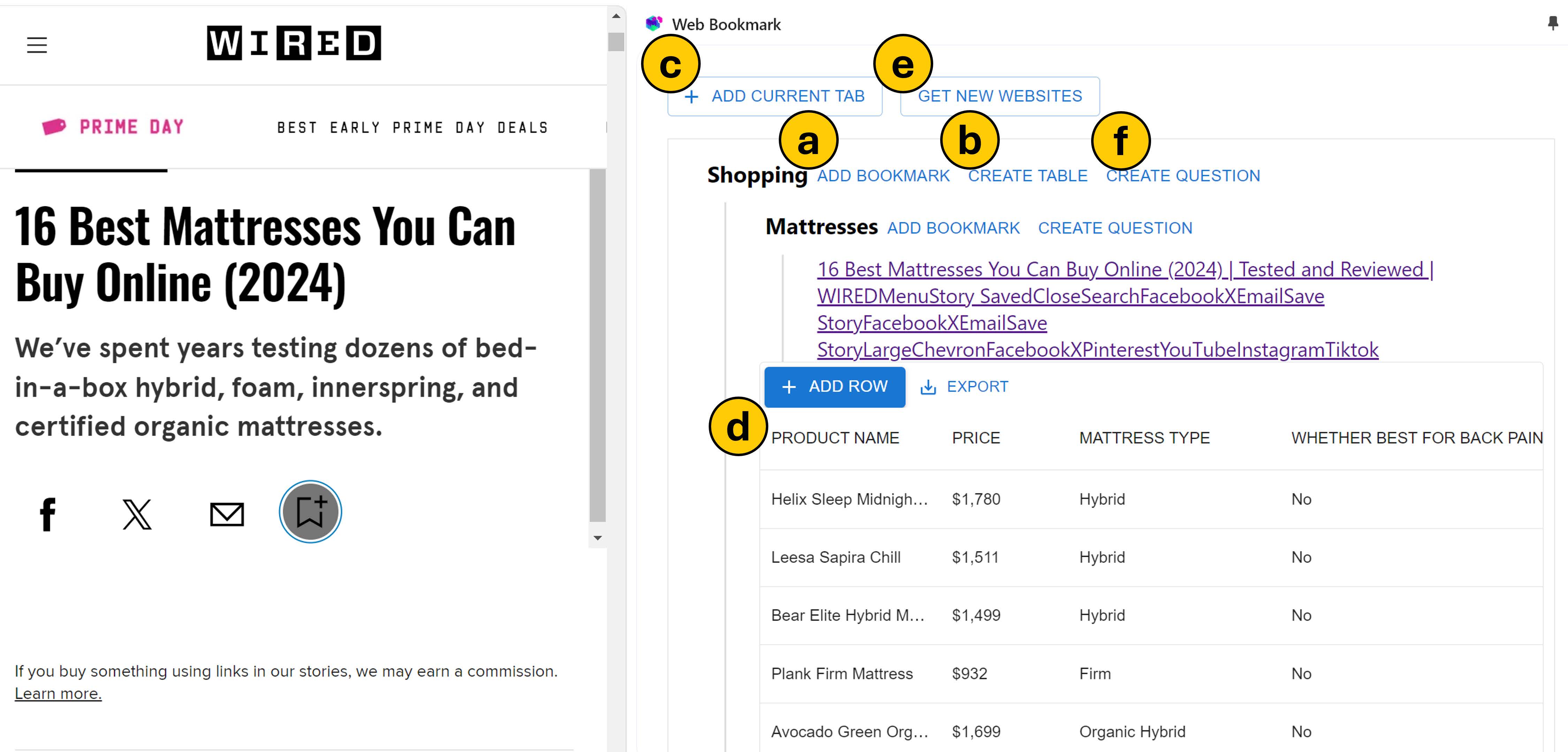

WebMemo: A Mixed-Initiative System for Extracting and Structuring Web Content

Through proactive and flexible data collection based on high-level user input, WebMemo reduces the cognitive load and manual effort required for managing web content.

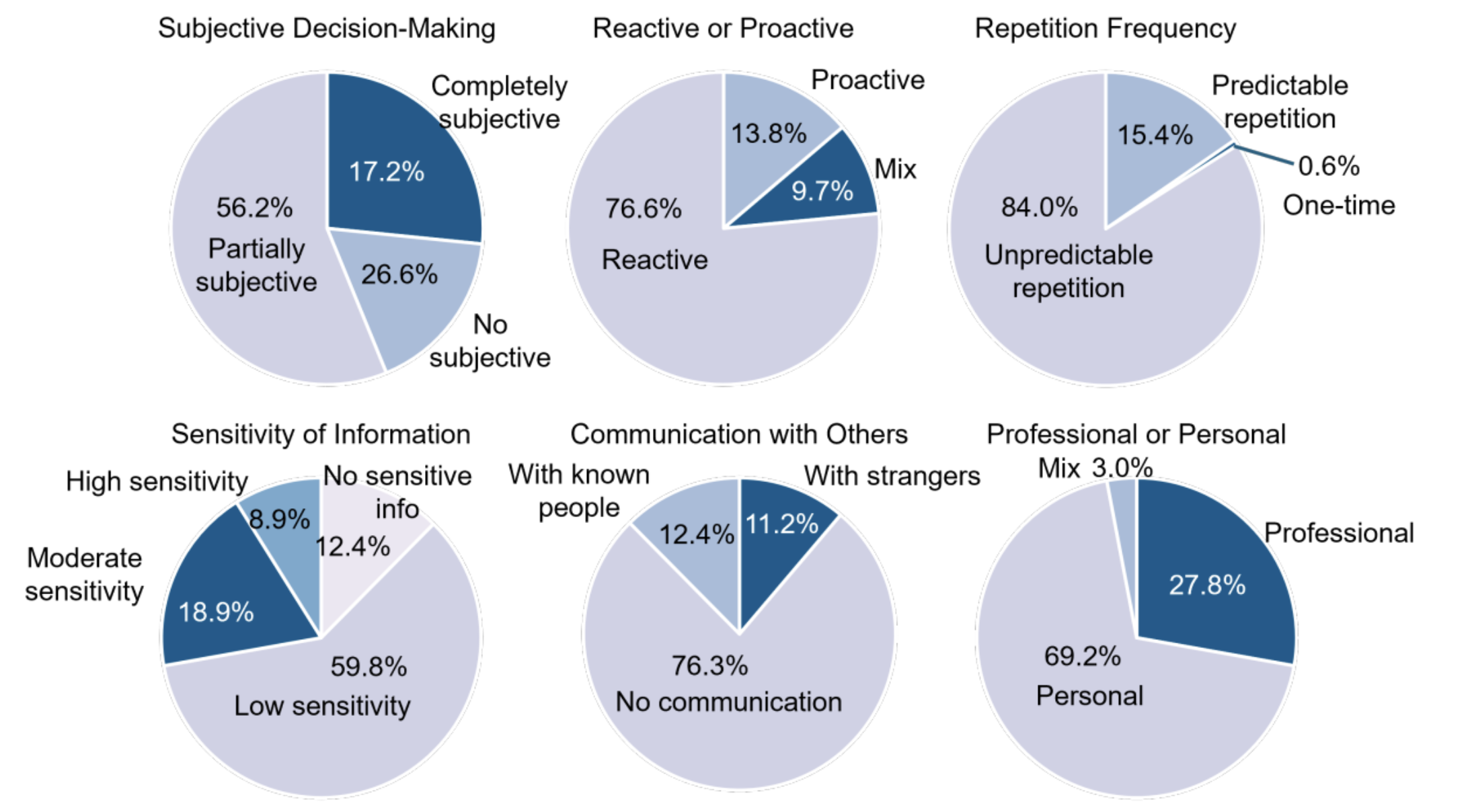

Understanding Challenges and Needs of Using AI in Web Automation Systems

Through user studies and analysis, we examine the challenges users face and the needs they have when using AI in web automation systems.

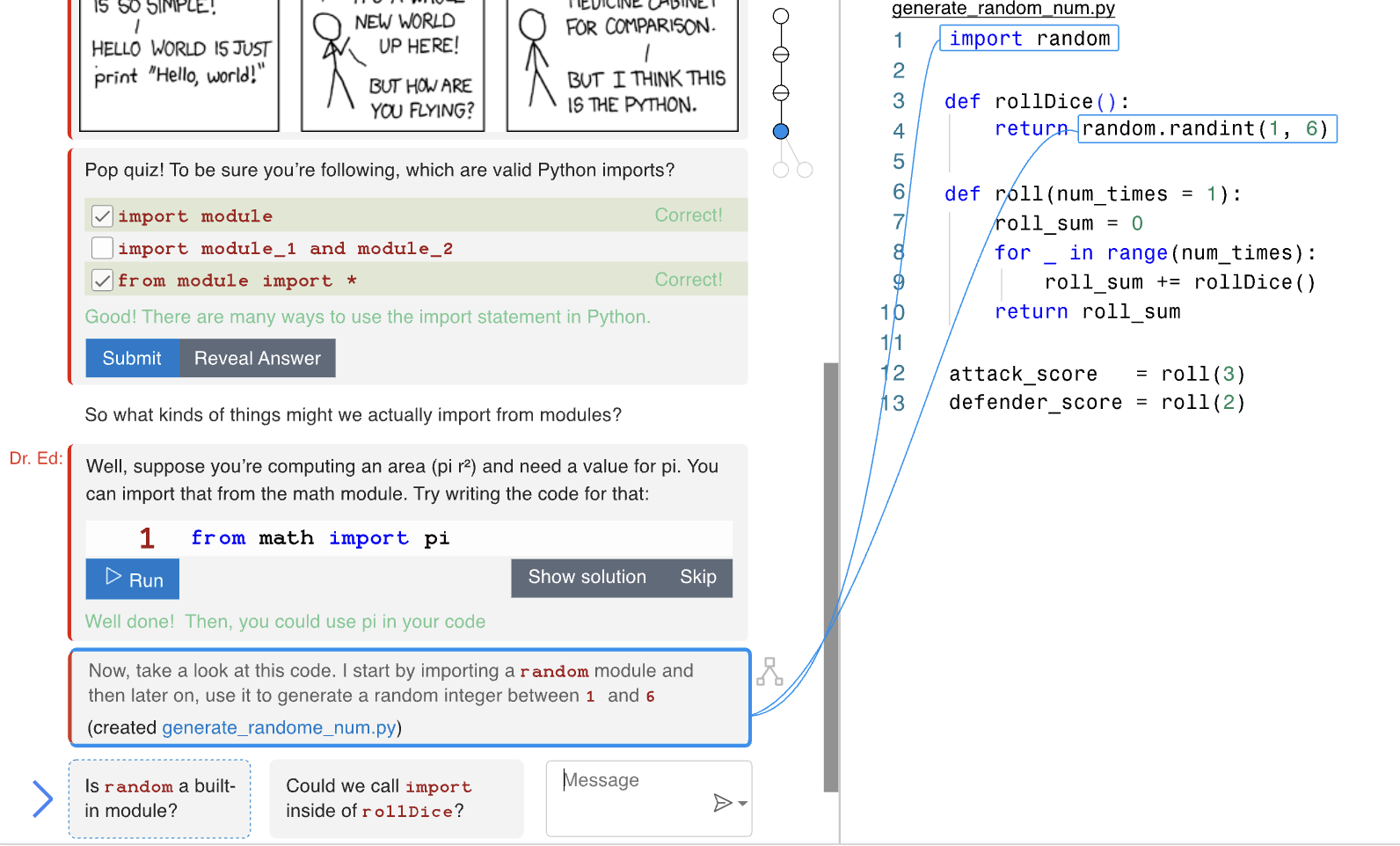

EDBooks: AI-Enhanced Interactive Narratives for Programming Education

EDBooks uses AI to enhance interactive narratives for programming education.

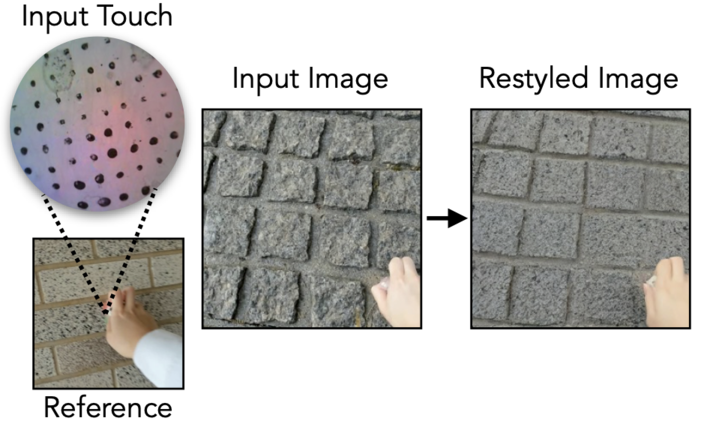

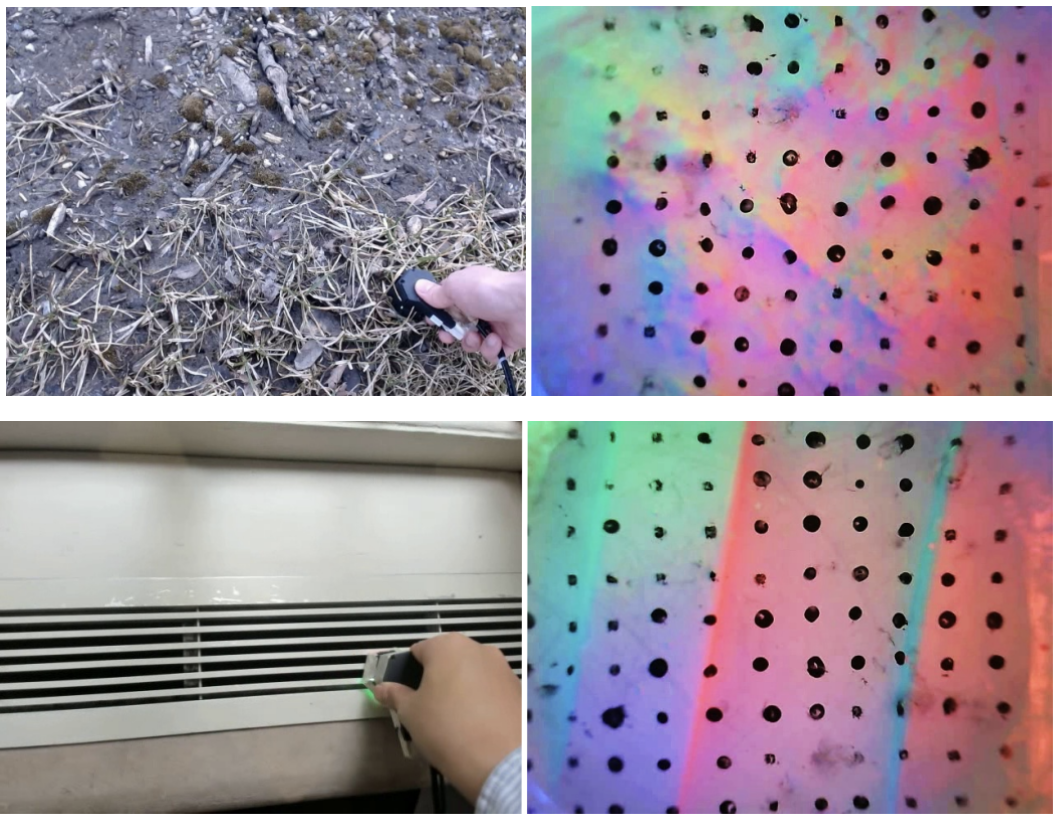

Generating Visual Scenes from Touch

We use diffusion models to generate images from tactile signals (and vice versa).

Touch and Go: Learning from Human-Collected Vision and Touch

A dataset of paired visual and tactile data collected by humans, which we apply to three tasks: (1) restyling an image to match a tactile input, (2) self-supervised representation learning, and (3) multimodal video prediction.

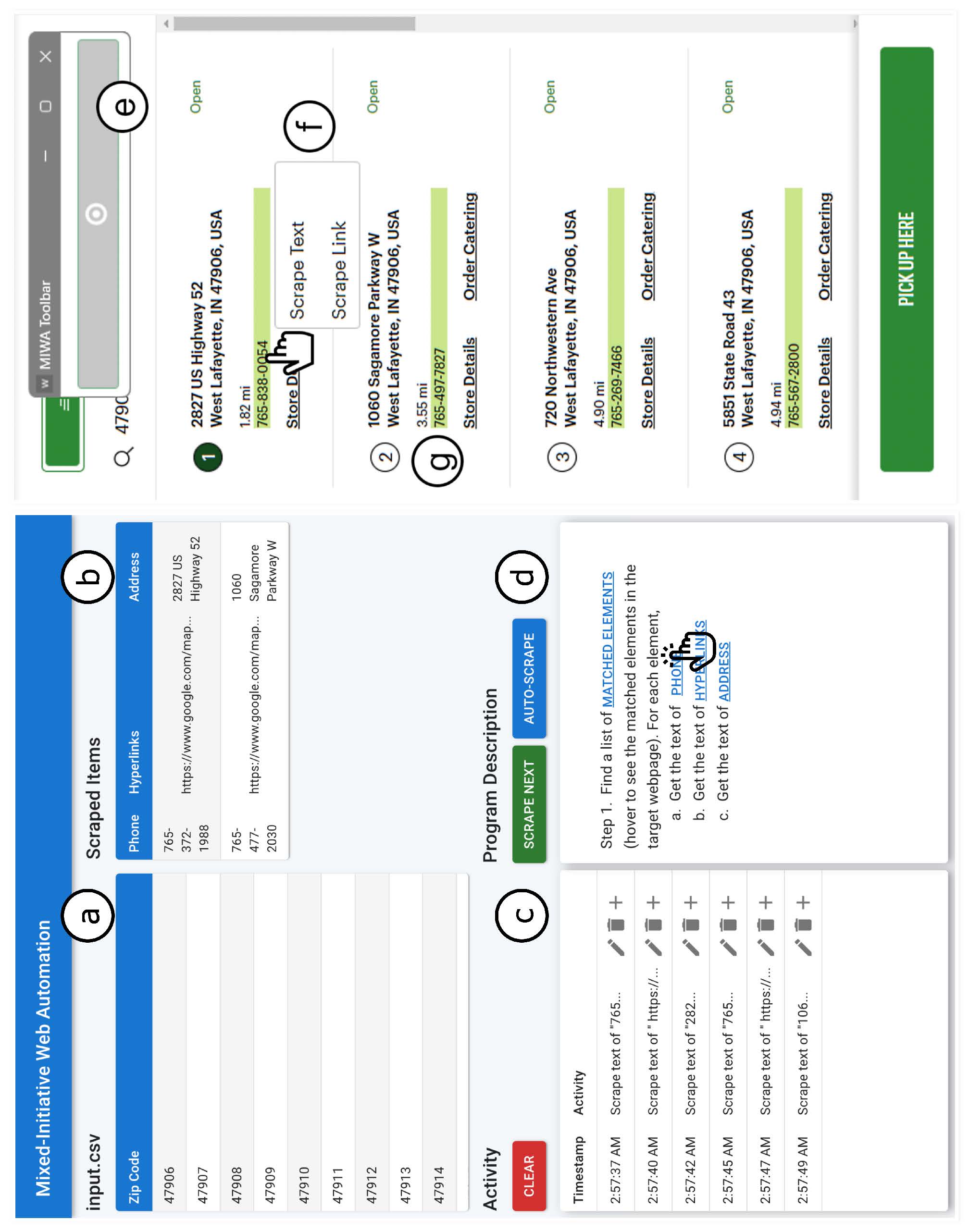

MIWA: Mixed-Initiative Web Automation for Better User Control and Confidence

We present MIWA, a mixed-initiative web automation system that enables users to create web scraping programs by demonstrating the content they want from target websites.

- Britpop enthusiast: Oasis, Blur, Coldplay

- Dota 2 when not researching